## Summary

This PR fixes an asymmetry where files saved with a `file://` prefix could not be read back, causing "file not found" errors.

## Problem

The Cognee framework has a mismatch between its save and read paths:

- `save_data_to_file.py` adds a `file://` prefix when saving files

- `open_data_file.py` does not handle the `file://` prefix when reading files

- As a result, saved files appear "lost" and reads fail with cryptic "file not found" errors
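The mismatch described above can be reproduced in isolation. The sketch below does not call Cognee at all: `save_with_prefix` and `can_open` are hypothetical stand-ins, assumed only to mimic a save path that returns a `file://` location and a read path that passes the location straight to `open()`.

```python
import os
import tempfile


def save_with_prefix(directory: str, name: str, text: str) -> str:
    """Hypothetical stand-in for the save side: write a file, return a file:// location."""
    path = os.path.join(directory, name)
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(text)
    return "file://" + path


def can_open(location: str) -> bool:
    """Try to open the location exactly as given, with no prefix handling."""
    try:
        with open(location, "r", encoding="utf-8"):
            return True
    except OSError:
        # FileNotFoundError is a subclass of OSError.
        return False


with tempfile.TemporaryDirectory() as tmp:
    location = save_with_prefix(tmp, "note.txt", "hello")
    # The prefixed location is treated as an ordinary (nonexistent) path.
    print("prefixed location opens:", can_open(location))
    # The same location without the prefix points at the real file.
    print("plain path opens:", can_open(location[len("file://"):]))
```

The file exists on disk the whole time; only the location string handed to the reader is wrong, which is why the failure looks like a lost file.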

## Solution

Added proper handling for `file://` URLs in `open_data_file.py` by:

- Checking if the file path starts with `"file://"`

- Stripping the prefix using `replace("file://", "", 1)`

- Following the same pattern as S3 URL handling
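As a rough illustration of the steps listed above, the following sketch strips a single leading `file://` prefix before opening a local file. The names `strip_file_prefix` and `open_local_file` are hypothetical and are not taken from `open_data_file.py`; the S3 branch is omitted because it is not shown in this description.

```python
from contextlib import contextmanager


def strip_file_prefix(file_path: str) -> str:
    """Return a plain filesystem path, dropping one leading file:// prefix."""
    if file_path.startswith("file://"):
        # count=1 removes only the first occurrence of the prefix.
        return file_path.replace("file://", "", 1)
    return file_path


@contextmanager
def open_local_file(file_path: str, mode: str = "r", encoding=None):
    """Hypothetical stand-in for the local-file branch of the reader."""
    with open(strip_file_prefix(file_path), mode=mode, encoding=encoding) as handle:
        yield handle
```

Paths without the prefix pass through unchanged, which is what keeps existing callers working.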

## Changes

- Modified `cognee/modules/data/processing/document_types/open_data_file.py` to handle `file://` URLs

- Added comprehensive unit tests in `cognee/tests/unit/modules/data/test_open_data_file.py`

## Testing

Added 6 test cases covering:

- Regular file paths (ensuring backward compatibility)

- `file://` URLs in text mode

- `file://` URLs in binary mode

- `file://` URLs with specific encoding

- Nonexistent files with `file://` URLs

- Edge case with multiple `file://` prefixes

All tests pass successfully.
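For readers who want a feel for these cases, here is a minimal sketch using `unittest`. It is not the contents of `test_open_data_file.py`: `open_stripped` is a hypothetical stand-in for the patched reader, and the assertion for the multiple-prefix case is an assumption about what stripping only the first prefix implies.

```python
import os
import tempfile
import unittest


def open_stripped(file_path, mode="r", encoding=None):
    """Hypothetical stand-in: strip one file:// prefix, then open the path."""
    if file_path.startswith("file://"):
        file_path = file_path.replace("file://", "", 1)
    return open(file_path, mode=mode, encoding=encoding)


class FileUrlTests(unittest.TestCase):
    def setUp(self):
        # Write a small UTF-8 fixture file that the tests read back.
        self.tmp = tempfile.TemporaryDirectory()
        self.addCleanup(self.tmp.cleanup)
        self.path = os.path.join(self.tmp.name, "sample.txt")
        with open(self.path, "w", encoding="utf-8") as handle:
            handle.write("caf\u00e9")

    def test_regular_path(self):
        with open_stripped(self.path, encoding="utf-8") as handle:
            self.assertEqual(handle.read(), "caf\u00e9")

    def test_file_url_text_mode(self):
        with open_stripped("file://" + self.path, encoding="utf-8") as handle:
            self.assertEqual(handle.read(), "caf\u00e9")

    def test_file_url_binary_mode(self):
        with open_stripped("file://" + self.path, mode="rb") as handle:
            self.assertEqual(handle.read(), "caf\u00e9".encode("utf-8"))

    def test_missing_file_url(self):
        with self.assertRaises(OSError):
            open_stripped("file://" + self.path + ".missing")

    def test_double_prefix_strips_only_one(self):
        # Only the first prefix is removed, so the remainder is not a usable path.
        with self.assertRaises(OSError):
            open_stripped("file://file://" + self.path)
```

Saved to a file, the sketch can be run with `python -m unittest <module>`.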

## Notes

- This is a minimal fix that maintains backward compatibility

- The fix follows the existing pattern used for S3 URL handling

- No breaking changes to the API

I affirm that all code in every commit of this pull request conforms to the terms of the Topoteretes Developer Certificate of Origin.

Signed-off-by: Hashem Aldhaheri <aenawi@gmail.com>


cognee - Memory for AI Agents in 5 lines of code


🚀 We are launching Cognee SaaS: Sign up here for the hosted beta!

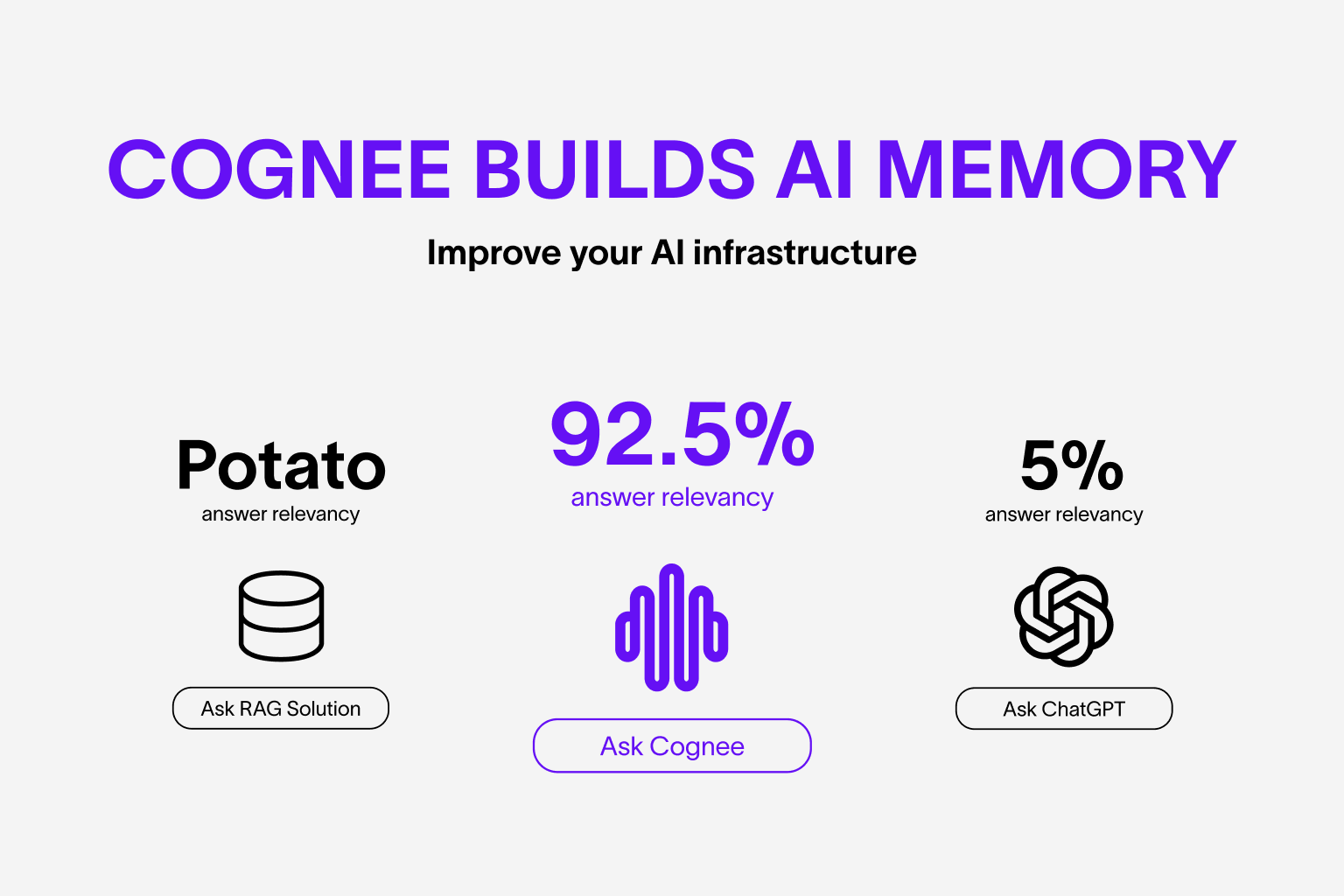

Build dynamic memory for Agents and replace RAG using scalable, modular ECL (Extract, Cognify, Load) pipelines.

🌐 Available Languages: Deutsch | Español | français | 日本語 | 한국어 | Português | Русский | 中文

Features

- Interconnect and retrieve your past conversations, documents, images and audio transcriptions

- Replace RAG systems and reduce developer effort and cost

- Load data to graph and vector databases using only Pydantic

- Manipulate your data while ingesting from 30+ data sources

Get Started

Get started quickly with a Google Colab notebook, Deepnote notebook, or starter repo.

Contributing

Your contributions are at the core of making this a true open source project. Any contributions you make are greatly appreciated. See CONTRIBUTING.md for more information.

📦 Installation

You can install Cognee using pip, poetry, uv, or any other Python package manager. Cognee supports Python 3.8 to 3.12.

With pip

```bash
pip install cognee
```

Local Cognee installation

You can install the local Cognee repo using pip, poetry, or uv. For a local pip installation, make sure your pip version is above 21.3.

With uv and all optional dependencies

```bash
uv sync --all-extras
```

💻 Basic Usage

Setup

```python
import os

os.environ["LLM_API_KEY"] = "YOUR OPENAI_API_KEY"
```

You can also set the variables by creating a .env file from our template. For details on using different LLM providers, check out our documentation.

Simple example

This script will run the default pipeline:

```python
import cognee
import asyncio


async def main():
    # Add text to cognee
    await cognee.add("Natural language processing (NLP) is an interdisciplinary subfield of computer science and information retrieval.")

    # Generate the knowledge graph
    await cognee.cognify()

    # Query the knowledge graph
    results = await cognee.search("Tell me about NLP")

    # Display the results
    for result in results:
        print(result)


if __name__ == '__main__':
    asyncio.run(main())
```

Example output:

Natural Language Processing (NLP) is a cross-disciplinary and interdisciplinary field that involves computer science and information retrieval. It focuses on the interaction between computers and human language, enabling machines to understand and process natural language.

Our paper is out! Read here

Cognee UI

You can also cognify your files and query them using the cognee UI.

Try cognee UI out locally here.

Understand our architecture

Demos

- What is AI memory

- Simple GraphRAG demo

- cognee with Ollama

Code of Conduct

We are committed to making open source an enjoyable and respectful experience for our community. See CODE_OF_CONDUCT for more information.