

cognee - Memory for AI Agents in 5 lines of code

Demo · Learn more · Join Discord · Join r/AIMemory · Docs · cognee community repo

🚀 We launched Cogwit beta (Fully-hosted AI Memory): Sign up here! 🚀

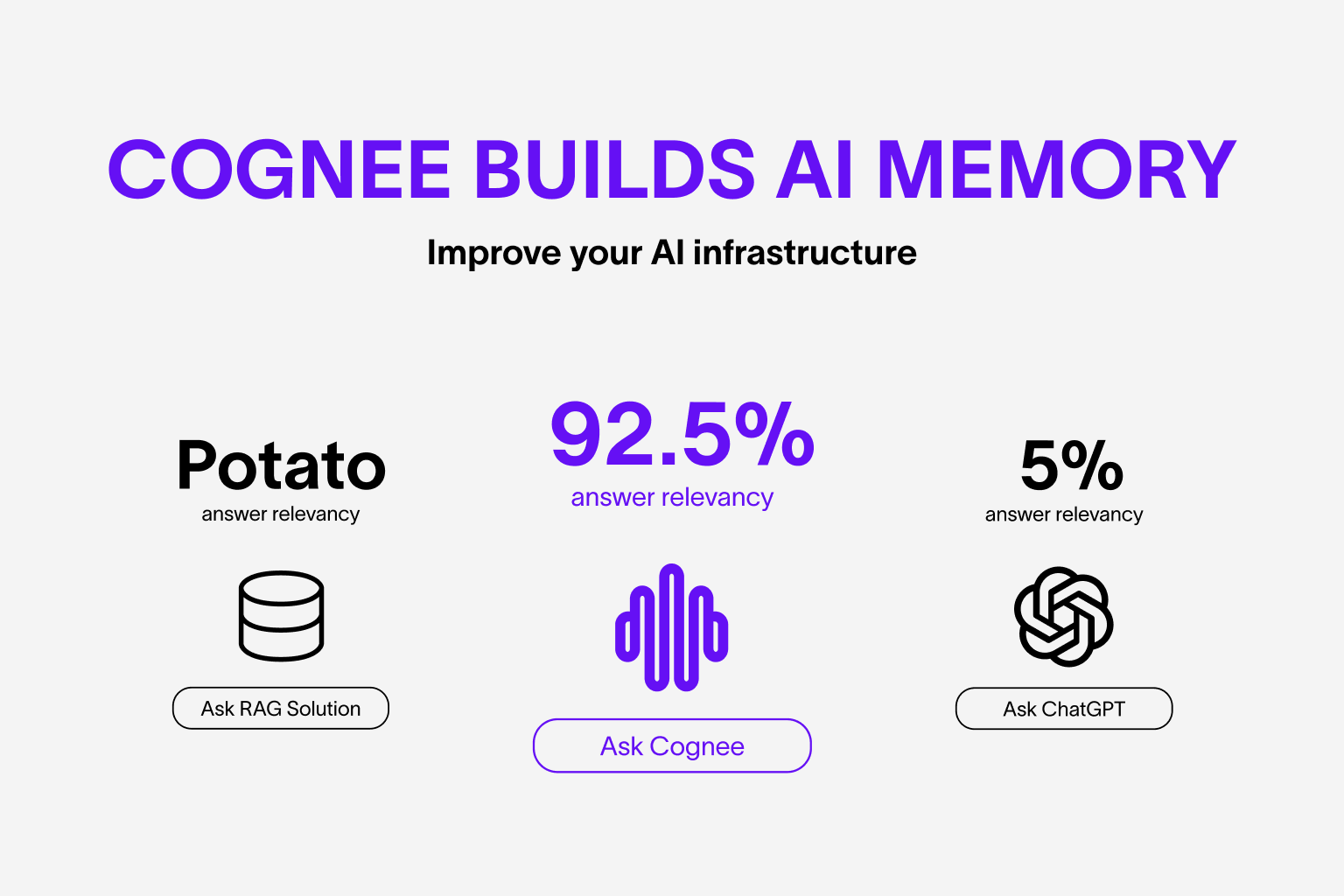

Build dynamic memory for Agents and replace RAG using scalable, modular ECL (Extract, Cognify, Load) pipelines.

🌐 Available Languages: Deutsch | Español | français | 日本語 | 한국어 | Português | Русский | 中文

Features

- Interconnect and retrieve your past conversations, documents, images and audio transcriptions

- Replace RAG systems and reduce developer effort and cost

- Load data to graph and vector databases using only Pydantic

- Manipulate your data while ingesting from 30+ data sources

Get Started

Get started quickly with a Google Colab notebook, Deepnote notebook, or starter repo.

Contributing

Your contributions are at the core of making this a true open source project. Any contributions you make are greatly appreciated. See CONTRIBUTING.md for more information.

📦 Installation

You can install Cognee using pip, poetry, uv, or any other Python package manager. Cognee supports Python 3.8 to 3.12.
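Before installing, it can help to confirm that the active interpreter falls inside the supported range. The following is a minimal sketch: the helper name `is_supported_python` is hypothetical and not part of Cognee, and the bounds simply mirror the 3.8 to 3.12 range stated above.

```python
import sys

# Bounds copied from the supported range stated above (inclusive).
MIN_SUPPORTED = (3, 8)
MAX_SUPPORTED = (3, 12)


def is_supported_python(version=None):
    """Return True if (major, minor) lies within the supported range.

    Hypothetical helper for illustration; not part of the Cognee API.
    """
    if version is None:
        version = sys.version_info
    major_minor = tuple(version[:2])
    return MIN_SUPPORTED <= major_minor <= MAX_SUPPORTED


if __name__ == "__main__":
    current = "{}.{}".format(*sys.version_info[:2])
    if is_supported_python():
        print(f"Python {current} is within the supported range.")
    else:
        print(f"Python {current} is outside the supported range (3.8 to 3.12).")
```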

With pip

    pip install cognee

Local Cognee installation

You can install the local Cognee repo using pip, poetry, or uv. For a local pip installation, make sure your pip version is above 21.3.

With uv, including all optional dependencies

    uv sync --all-extras

💻 Basic Usage

Setup

    import os

    os.environ["LLM_API_KEY"] = "YOUR OPENAI_API_KEY"

You can also set the variables by creating a .env file from our template. To use a different LLM provider, check out our documentation for more information.
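For illustration, here is a minimal sketch of how a `.env` file could be read into the environment using only the standard library. It assumes a simple `KEY=value` file containing `LLM_API_KEY`. The helper `load_env_file` is hypothetical and not part of Cognee; it only illustrates the expected file format and is not a description of how Cognee itself reads the file.

```python
import os
from pathlib import Path


def load_env_file(path=".env"):
    """Load KEY=value pairs from a file into os.environ.

    Hypothetical helper for illustration; not part of the Cognee API.
    Existing environment variables are not overwritten.
    Returns a dict of the variables that were newly set.
    """
    loaded = {}
    env_path = Path(path)
    if not env_path.is_file():
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip('"').strip("'")
        if key and key not in os.environ:
            os.environ[key] = value
            loaded[key] = value
    return loaded


if __name__ == "__main__":
    newly_set = load_env_file()
    print("Variables set from .env:", sorted(newly_set))
```

Leaving already-set variables untouched means a key exported in the shell takes precedence over the file, which is a design choice of this sketch rather than a documented Cognee behavior.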

Simple example

This script will run the default pipeline:

    import cognee
    import asyncio


    async def main():
        # Add text to cognee
        await cognee.add("Natural language processing (NLP) is an interdisciplinary subfield of computer science and information retrieval.")

        # Generate the knowledge graph
        await cognee.cognify()

        # Query the knowledge graph
        results = await cognee.search("Tell me about NLP")

        # Display the results
        for result in results:
            print(result)


    if __name__ == '__main__':
        asyncio.run(main())

Example output:

Natural Language Processing (NLP) is a cross-disciplinary and interdisciplinary field that involves computer science and information retrieval. It focuses on the interaction between computers and human language, enabling machines to understand and process natural language.
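The same three calls extend naturally to several documents and several questions. The sketch below is a minimal illustration under stated assumptions: `ingest_and_query` and `EchoClient` are hypothetical names, not part of Cognee, and the helper relies only on the `add`, `cognify`, and `search` coroutines used in the example above. It also assumes that `cognify` can be run once after several `add` calls. Because the helper accepts any object exposing those coroutines, passing the `cognee` module itself should work the same way as passing the stand-in.

```python
import asyncio


async def ingest_and_query(client, texts, questions):
    """Add each text, build the graph once, then run each question.

    Hypothetical helper for illustration. `client` is any object with
    async `add`, `cognify`, and `search` callables, such as the
    `cognee` module used in the example above.
    """
    for text in texts:
        await client.add(text)
    # Assumption: one cognify call after all texts have been added.
    await client.cognify()
    answers = {}
    for question in questions:
        answers[question] = await client.search(question)
    return answers


class EchoClient:
    """Stand-in client so the sketch runs without an API key."""

    def __init__(self):
        self.texts = []
        self.cognified = False

    async def add(self, text):
        self.texts.append(text)

    async def cognify(self):
        self.cognified = True

    async def search(self, query):
        # Return stored texts that share at least one word with the query.
        words = set(query.lower().split())
        return [t for t in self.texts if words & set(t.lower().split())]


if __name__ == "__main__":
    demo = asyncio.run(
        ingest_and_query(
            EchoClient(),
            ["NLP is a subfield of computer science."],
            ["Tell me about NLP"],
        )
    )
    for question, results in demo.items():
        print(question, "->", results)
```

The stand-in performs plain word matching and does not reflect how Cognee answers queries; it exists only so the control flow can be run and inspected locally.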

Our paper is out! Read here

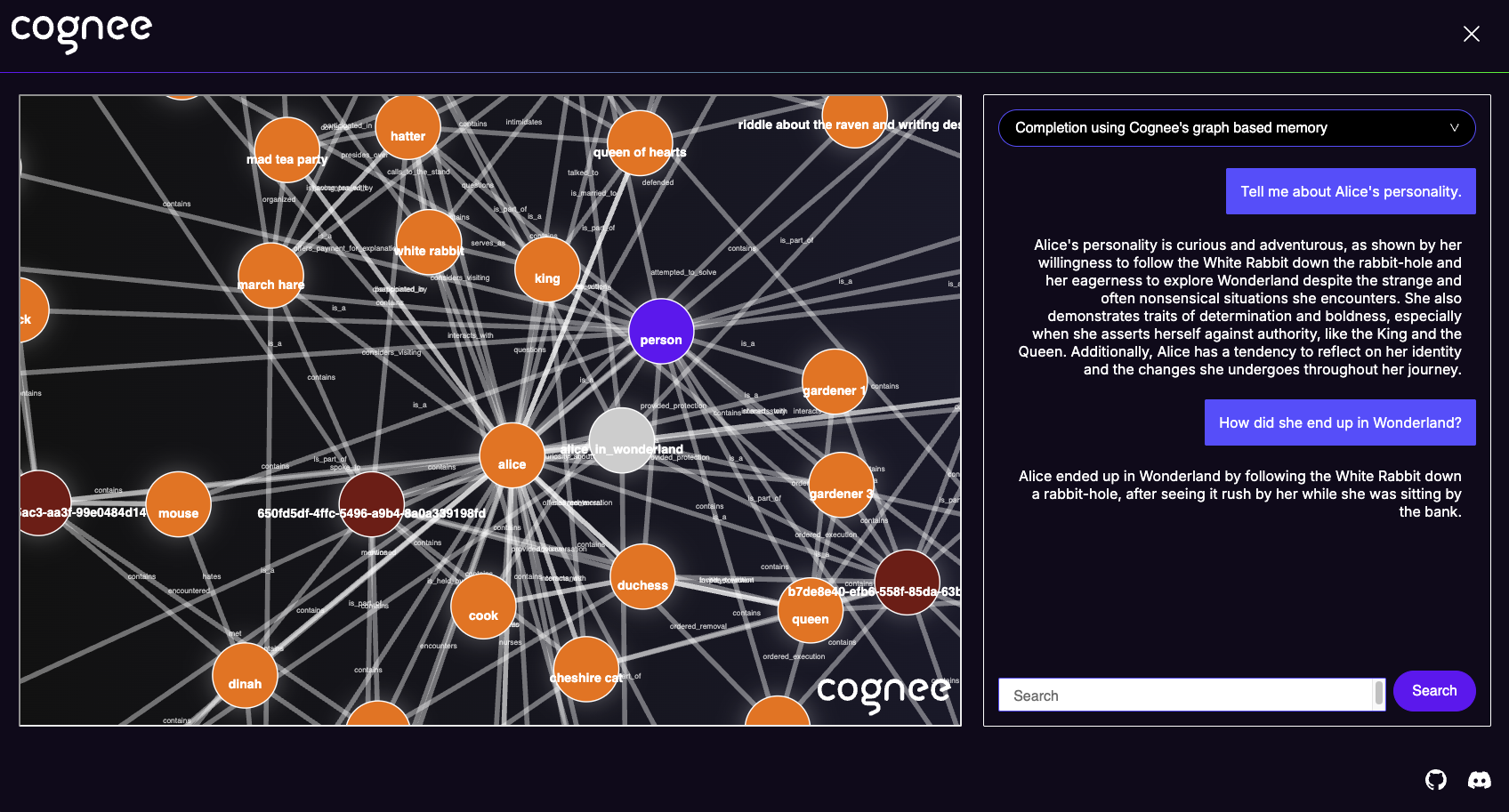

Cognee UI

You can also cognify your files and query them using the cognee UI.

Try the cognee UI locally here.

Understand our architecture

Demos

- Cogwit Beta demo

- Simple GraphRAG demo

- cognee with Ollama

Code of Conduct

We are committed to making open source an enjoyable and respectful experience for our community. See CODE_OF_CONDUCT for more information.