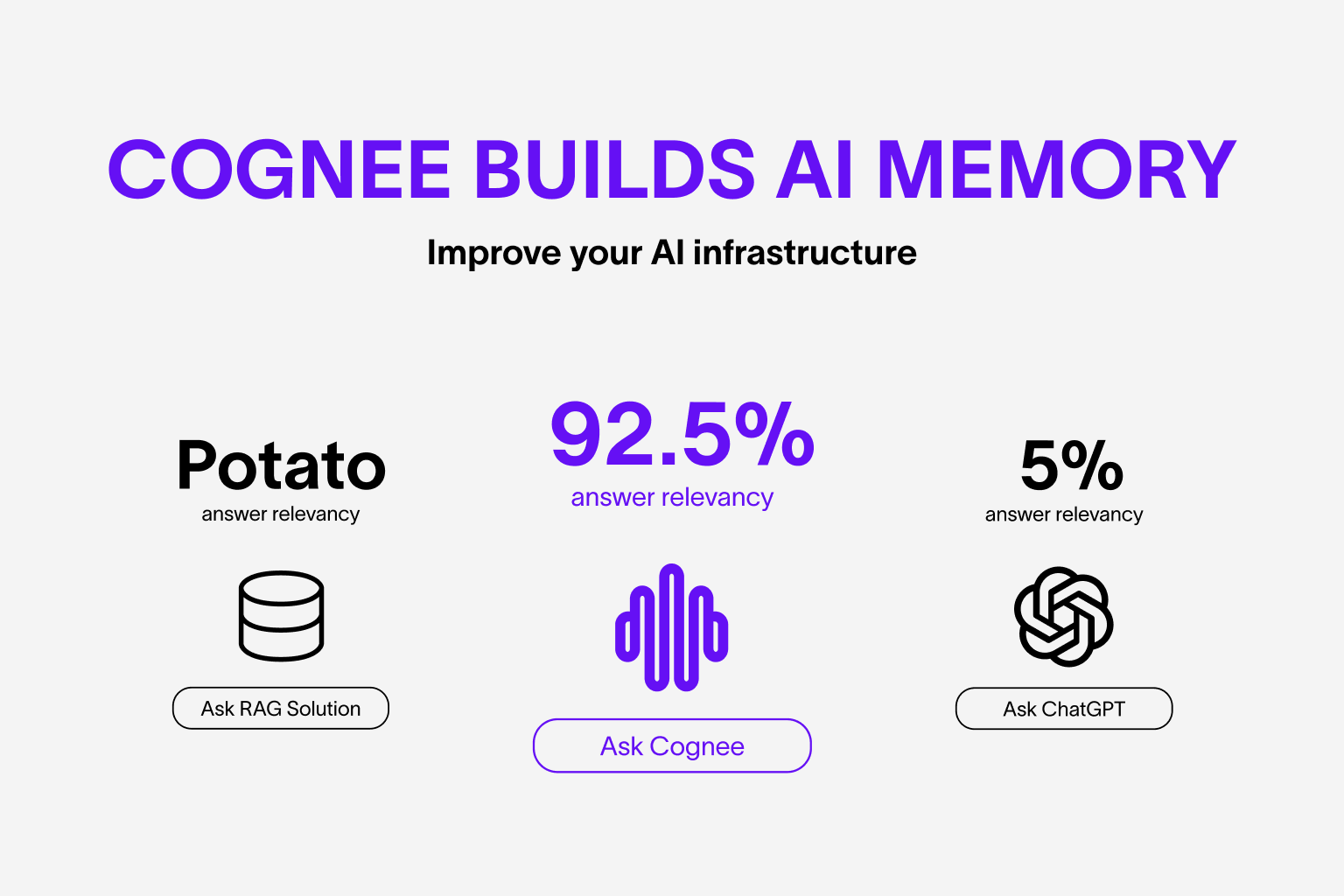

cognee - Memory for AI Agents in 6 lines of code

Demo · Learn more · Join Discord · Join r/AIMemory · Docs · cognee community repo

Build dynamic memory for Agents and replace RAG using scalable, modular ECL (Extract, Cognify, Load) pipelines.

🌐 Available Languages : Deutsch | Español | français | 日本語 | 한국어 | Português | Русский | 中文

Get Started

Get started quickly with a Google Colab notebook, a Deepnote notebook, or the starter repo.

About cognee

cognee works locally and stores your data on your device. Our hosted solution is just our deployment of OSS cognee on Modal, with the goal of making development and productionization easier.

Self-hosted package:

- Interconnects any kind of documents: past conversations, files, images, and audio transcriptions

- Replaces RAG systems with a memory layer based on graphs and vectors

- Reduces developer effort and cost, while increasing quality and precision

- Provides Pythonic data pipelines that manage data ingestion from 30+ data sources

- Is highly customizable with custom tasks, pipelines, and a set of built-in search endpoints

Hosted platform:

- Includes a managed UI and a hosted solution

Self-Hosted (Open Source)

📦 Installation

You can install cognee with pip, poetry, uv, or any other Python package manager.

cognee supports Python 3.10 to 3.12.

With uv

uv pip install cognee

Detailed instructions can be found in our docs
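The supported Python range stated above can be checked before installing. The following is a minimal sketch using only the standard library; the names `SUPPORTED_MIN`, `SUPPORTED_MAX`, and `python_is_supported` are illustrative and are not part of cognee.

```python
import sys

# Bounds taken from the statement above: Python 3.10 to 3.12 (inclusive).
SUPPORTED_MIN = (3, 10)
SUPPORTED_MAX = (3, 12)


def python_is_supported(version=None):
    """Return True if the (major, minor) version falls in the supported range.

    `version` defaults to the running interpreter; pass a tuple to check another one.
    """
    if version is None:
        version = sys.version_info
    major_minor = tuple(version[:2])
    return SUPPORTED_MIN <= major_minor <= SUPPORTED_MAX


# Example: report whether the current interpreter is in range.
print("supported" if python_is_supported() else "unsupported", sys.version.split()[0])
```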

💻 Basic Usage

Setup

import os
os.environ["LLM_API_KEY"] = "YOUR OPENAI_API_KEY"

You can also set the variables by creating a .env file from our template. To use a different LLM provider, see our documentation.
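To illustrate what setting variables through a .env file amounts to, here is a minimal standard-library sketch that reads `KEY=VALUE` lines into `os.environ`. It is an assumption about the general file shape, not cognee's own loader; `parse_env_text` and `load_env_file` are hypothetical helper names, and a dedicated library such as python-dotenv handles more edge cases.

```python
import os


def parse_env_text(text):
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip()
        # Drop one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key] = value
    return values


def load_env_file(path=".env", override=False):
    """Copy variables from the file into os.environ and return them.

    Existing environment variables win unless override=True.
    """
    with open(path, encoding="utf-8") as handle:
        values = parse_env_text(handle.read())
    for key, value in values.items():
        if override or key not in os.environ:
            os.environ[key] = value
    return values
```

Calling `load_env_file()` before importing cognee would make a key such as `LLM_API_KEY` available in the same way as the `os.environ` assignment above.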

Simple example

Python

This script will run the default pipeline:

import cognee
import asyncio


async def main():
    # Add text to cognee
    await cognee.add("Cognee turns documents into AI memory.")

    # Generate the knowledge graph
    await cognee.cognify()

    # Add memory algorithms to the graph
    await cognee.memify()

    # Query the knowledge graph
    results = await cognee.search("What does cognee do?")

    # Display the results
    for result in results:
        print(result)


if __name__ == '__main__':
    asyncio.run(main())

Example output:

Cognee turns documents into AI memory.
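The same add, cognify, memify, search sequence can be wrapped in a small reusable helper. This is a minimal sketch that assumes only the four calls shown in the script above; `remember_and_ask` and its `client` parameter are hypothetical names. Passing the module in as an argument keeps the helper testable with a stand-in object.

```python
import asyncio


async def remember_and_ask(client, texts, question):
    """Run the default pipeline steps in order and return the search results.

    `client` is expected to expose the add/cognify/memify/search coroutines
    used in the script above (for example, the imported `cognee` module).
    """
    # Add each piece of text
    for text in texts:
        await client.add(text)

    # Generate the knowledge graph
    await client.cognify()

    # Add memory algorithms to the graph
    await client.memify()

    # Query the knowledge graph
    return await client.search(question)


# Usage (requires cognee to be installed and LLM_API_KEY to be set):
#   import cognee
#   results = asyncio.run(
#       remember_and_ask(cognee, ["Cognee turns documents into AI memory."],
#                        "What does cognee do?")
#   )
```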

Via CLI

Let's get the basics covered

cognee-cli add "Cognee turns documents into AI memory."

cognee-cli cognify

cognee-cli search "What does cognee do?"

cognee-cli delete --all

or run

cognee-cli -ui
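The CLI steps above can also be driven from a script. The sketch below builds the same `add`, `cognify`, and `search` invocations and runs them with `subprocess`; the function names are illustrative, and `delete --all` is deliberately left out because it is destructive. It assumes `cognee-cli` is on `PATH` once cognee is installed.

```python
import shutil
import subprocess


def basic_cli_commands(text, question):
    """Return the argv lists for the add, cognify, and search steps shown above."""
    return [
        ["cognee-cli", "add", text],
        ["cognee-cli", "cognify"],
        ["cognee-cli", "search", question],
    ]


def run_basic_flow(text, question):
    """Run each command in order, stopping at the first failure."""
    if shutil.which("cognee-cli") is None:
        raise RuntimeError("cognee-cli not found on PATH; install cognee first")
    for argv in basic_cli_commands(text, question):
        # check=True raises CalledProcessError on a non-zero exit code
        subprocess.run(argv, check=True)


# Usage:
#   run_basic_flow("Cognee turns documents into AI memory.", "What does cognee do?")
```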

Hosted Platform

Get up and running in minutes with automatic updates, analytics, and enterprise security.

- Sign up on Cogwit

- Add your API key to the local UI and sync your data to Cogwit

Demos

- Cogwit Beta demo

- Simple GraphRAG demo

- cognee with Ollama

Contributing

Your contributions are at the core of making this a true open source project. Any contributions you make are greatly appreciated. See CONTRIBUTING.md for more information.

Code of Conduct

We are committed to making open source an enjoyable and respectful experience for our community. See CODE_OF_CONDUCT for more information.

Citation

We now have a paper you can cite:

@misc{markovic2025optimizinginterfaceknowledgegraphs,
  title={Optimizing the Interface Between Knowledge Graphs and LLMs for Complex Reasoning},
  author={Vasilije Markovic and Lazar Obradovic and Laszlo Hajdu and Jovan Pavlovic},
  year={2025},
  eprint={2505.24478},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2505.24478},
}