
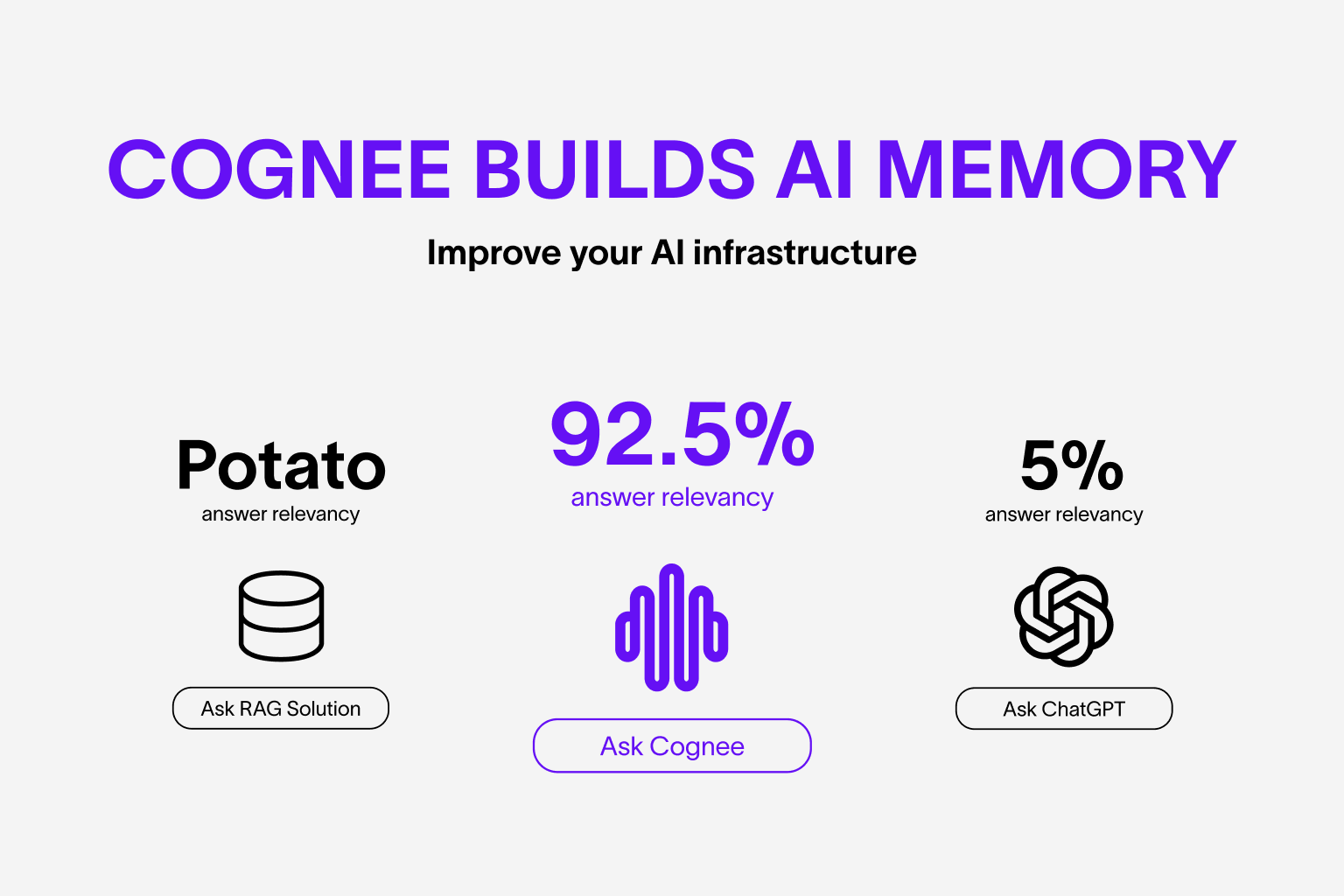

Cognee - Graph and Vector Memory for AI Agents

Demo · Docs · Learn More · Join Discord · Join r/AIMemory · Integrations

Persistent and accurate memory for AI agents. With Cognee, your AI agent understands, reasons, and adapts.

🌐 Available languages: Deutsch | Español | Français | 日本語 | 한국어 | Português | Русский | 中文

Quickstart

- 🚀 Try it now on Google Colab

- 📓 Explore our Deepnote Notebook

- 🛠️ Clone our Starter Repo

About Cognee

Cognee transforms your data into a living knowledge graph that learns from feedback and auto-tunes to deliver better answers over time.
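To make "graph and vector memory" a little more concrete, here is a toy, stdlib-only illustration of the general idea: facts stored as (subject, relation, object) triples, a bag-of-words cosine similarity standing in for vector embeddings, and a breadth-first expansion over the graph from the best-matching node. This is a hypothetical sketch of the concept only. It is not how Cognee stores or retrieves data, and every name in it (`TRIPLES`, `embed`, `cosine`, `retrieve`) is made up for illustration.

```python
import math
import re
from collections import Counter, defaultdict

# Conceptual toy only; not Cognee's internal data model or retrieval algorithm.
# Hypothetical facts stored as (subject, relation, object) triples.
TRIPLES = [
    ("cognee", "turns", "documents"),
    ("documents", "become", "ai memory"),
    ("ai memory", "serves", "agents"),
    ("ollama", "runs", "local models"),
]


def embed(text):
    """Bag-of-words counts; a crude stand-in for a real embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, triples, hops=1):
    """Vector step picks an entry node; graph step expands `hops` edges from it."""
    # Index each node to the triples that mention it as subject or object.
    touching = defaultdict(list)
    for triple in triples:
        touching[triple[0]].append(triple)
        touching[triple[2]].append(triple)
    if not touching:
        return None, []

    q = embed(query)
    seed = max(touching, key=lambda node: cosine(q, embed(node)))
    if cosine(q, embed(seed)) == 0.0:
        return None, []  # nothing in memory resembles the query

    # Breadth-first expansion, keeping triples in first-seen order.
    found, frontier, seen = [], [seed], {seed}
    for _ in range(hops):
        next_frontier = []
        for node in frontier:
            for triple in touching[node]:
                if triple not in found:
                    found.append(triple)
                for endpoint in (triple[0], triple[2]):
                    if endpoint not in seen:
                        seen.add(endpoint)
                        next_frontier.append(endpoint)
        frontier = next_frontier
    return seed, found


print(retrieve("What does cognee do?", TRIPLES))
# ('cognee', [('cognee', 'turns', 'documents')])
```

Raising `hops` to 2 pulls in `("documents", "become", "ai memory")` as well, which is the basic payoff of pairing similarity search with graph structure: related facts arrive even when they share no words with the question.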

Run anywhere:

- 🏠 Self-Hosted: Runs locally, data stays on your device

- ☁️ Cognee Cloud: Same open-source Cognee, deployed on Modal for seamless workflows

Self-Hosted Package:

- Unified memory for all your data sources

- Domain-smart copilots that learn and adapt over time

- Flexible memory architecture for AI agents and devices

- Integrates easily with your current technology stack

- Pythonic data pipelines supporting 30+ data sources out of the box

- Fully extensible: customize tasks, pipelines, and search endpoints

Cognee Cloud:

- Get a managed UI and hosted infrastructure with zero setup

Self-Hosted (Open Source)

Run Cognee on your stack. Cognee integrates easily with your current technologies. See our integration guides.

📦 Installation

Install Cognee with pip, poetry, uv, or your preferred Python package manager.

Requirements: Python 3.10 to 3.12

Using uv

uv pip install cognee

For detailed setup instructions, see our Documentation.
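Because the supported range is narrow (Python 3.10 to 3.12), a quick interpreter check before installing can save a confusing failure later. A minimal sketch, assuming you only care about the major and minor version; `is_supported` is a hypothetical helper, not part of Cognee, and the range is copied from the Requirements line, so update it if that line changes.

```python
import sys

# Supported range as stated in the Requirements line: Python 3.10 to 3.12.
SUPPORTED_MIN = (3, 10)
SUPPORTED_MAX = (3, 12)


def is_supported(version_info=None):
    """Return True if the (major, minor) version is within the supported range."""
    if version_info is None:
        version_info = sys.version_info
    return SUPPORTED_MIN <= tuple(version_info[:2]) <= SUPPORTED_MAX


if __name__ == "__main__":
    major, minor = sys.version_info[:2]
    status = "supported" if is_supported() else "NOT supported (needs 3.10 to 3.12)"
    print(f"Python {major}.{minor}: {status}")
```

In a setup script you could call `sys.exit(...)` when `is_supported()` returns False instead of just printing.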

💻 Usage

Configuration

import os

os.environ["LLM_API_KEY"] = "YOUR_OPENAI_API_KEY"

Alternatively, create a .env file based on our template (.env.template).

To integrate other LLM providers, see our LLM Provider Documentation.
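If you want to confirm the key is actually visible before any Cognee call, a minimal stdlib sketch like this one loads a .env file into `os.environ` (without overwriting variables that are already set) and fails fast when `LLM_API_KEY` is missing. `load_env_file` and `require_llm_key` are hypothetical helpers, not part of Cognee; Cognee may read .env on its own, and a library such as python-dotenv handles more of the format (multi-line values, `export` prefixes, variable expansion).

```python
import os
from pathlib import Path


def load_env_file(path=".env", override=False):
    """Parse simple KEY=VALUE lines into os.environ and return the parsed pairs.

    Hypothetical helper: skips blank lines, # comments and lines without '=',
    and strips one pair of matching surrounding quotes from each value.
    """
    parsed = {}
    env_path = Path(path)
    if not env_path.is_file():
        return parsed
    for raw in env_path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        # Existing environment variables win unless override=True.
        if override or key not in os.environ:
            os.environ[key] = value
        parsed[key] = value
    return parsed


def require_llm_key():
    """Raise a clear error if LLM_API_KEY is missing or empty."""
    if not os.environ.get("LLM_API_KEY"):
        raise RuntimeError("LLM_API_KEY is not set; export it or add it to your .env file.")
```

Calling `load_env_file()` followed by `require_llm_key()` at the top of a script turns a missing key into an immediate, readable error instead of a failure deep inside the first LLM request.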

Python Example

Run the default pipeline with this script:

import asyncio
import cognee

async def main():
    # Add text to cognee
    await cognee.add("Cognee turns documents into AI memory.")

    # Generate the knowledge graph
    await cognee.cognify()

    # Add memory algorithms to the graph
    await cognee.memify()

    # Query the knowledge graph
    results = await cognee.search("What does cognee do?")

    # Display the results
    for result in results:
        print(result)

if __name__ == '__main__':
    asyncio.run(main())

Example output:

Cognee turns documents into AI memory.
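When you unit-test agent code built around the add → cognify → memify → search sequence, you may not want every test run to call an LLM. One common approach is to pass the memory backend in as a parameter and use an in-memory stand-in during tests. `InMemoryMemory` and `run_pipeline` are hypothetical names for this sketch; the fake builds no graph and just does word-overlap matching.

```python
import asyncio
import re


def _words(text):
    return set(re.findall(r"\w+", text.lower()))


class InMemoryMemory:
    """Hypothetical test double with async add/cognify/memify/search methods.

    No graph is built and no LLM is called: search returns indexed texts that
    share at least one word with the query.
    """

    def __init__(self):
        self._pending = []
        self._indexed = []

    async def add(self, text):
        self._pending.append(text)

    async def cognify(self):
        # Stand-in for graph generation: make pending texts searchable.
        self._indexed.extend(self._pending)
        self._pending.clear()

    async def memify(self):
        # No-op here; in Cognee this step adds memory algorithms to the graph.
        return None

    async def search(self, query):
        query_words = _words(query)
        return [text for text in self._indexed if query_words & _words(text)]


async def run_pipeline(memory, text, question):
    """Same call order as the script above, against any object exposing these methods."""
    await memory.add(text)
    await memory.cognify()
    await memory.memify()
    return await memory.search(question)


if __name__ == "__main__":
    results = asyncio.run(
        run_pipeline(InMemoryMemory(), "Cognee turns documents into AI memory.", "What does cognee do?")
    )
    for result in results:
        print(result)
```

Since the script above calls these as module-level async functions with a single positional argument, passing the `cognee` module itself to `run_pipeline` should behave like the original script; treat that as an assumption to verify against your installed version.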

CLI Example

Get started with these essential commands:

cognee-cli add "Cognee turns documents into AI memory."

cognee-cli cognify

cognee-cli search "What does cognee do?"

cognee-cli delete --all

Or launch the local UI with:

cognee-cli -ui
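To drive the same CLI steps from a Python script, a small wrapper around `subprocess.run` is enough. A minimal sketch, assuming `cognee-cli` is on your PATH and accepts exactly the arguments shown in the commands above; `build_argv`, `run_cli`, and `STEPS` are hypothetical names, and `delete --all` is deliberately left out of the sequence because it is destructive.

```python
import shutil
import subprocess

# Commands from the CLI example, as argv lists (no shell quoting needed).
STEPS = [
    ["add", "Cognee turns documents into AI memory."],
    ["cognify"],
    ["search", "What does cognee do?"],
]


def build_argv(args):
    """Prefix the executable name so the list can go straight to subprocess.run."""
    return ["cognee-cli", *args]


def run_cli(args, dry_run=False):
    """Run one cognee-cli command; with dry_run=True only return the argv that would run."""
    argv = build_argv(args)
    if dry_run:
        return argv
    if shutil.which("cognee-cli") is None:
        raise FileNotFoundError("cognee-cli is not on PATH; install cognee first.")
    # check=True raises CalledProcessError if the command exits non-zero.
    completed = subprocess.run(argv, check=True, capture_output=True, text=True)
    return completed.stdout


if __name__ == "__main__":
    for step in STEPS:
        print(run_cli(step, dry_run=True))
```

Passing argv as a list rather than a shell string means the quoted text in `add` and `search` reaches the CLI intact, with no shell escaping to get wrong. Drop `dry_run=True` to actually execute the steps.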

Cognee Cloud

Cognee Cloud is the fastest way to start building reliable AI agent memory. Deploy in minutes with automatic updates, analytics, and enterprise-grade security.

- Sign up on Cognee Cloud

- Add your API key to the local UI and sync your data to Cognee Cloud

- Start building with managed infrastructure and zero configuration

Trusted in Production

From regulated industries to startup stacks, Cognee is deployed in production and delivering value now. Read our case studies to learn more.

Demos & Examples

See Cognee in action:

Cognee Cloud Beta Demo

Simple GraphRAG Demo

Cognee with Ollama

Community & Support

Contributing

We welcome contributions from the community! Your input helps make Cognee better for everyone. See CONTRIBUTING.md to get started.

Code of Conduct

We're committed to fostering an inclusive and respectful community. Read our Code of Conduct for guidelines.

Research & Citation

Cite our research paper on optimizing knowledge graphs for LLM reasoning:

@misc{markovic2025optimizinginterfaceknowledgegraphs,
  title={Optimizing the Interface Between Knowledge Graphs and LLMs for Complex Reasoning},
  author={Vasilije Markovic and Lazar Obradovic and Laszlo Hajdu and Jovan Pavlovic},
  year={2025},
  eprint={2505.24478},
  archivePrefix={arXiv},
  primaryClass={cs.AI},
  url={https://arxiv.org/abs/2505.24478},
}