cognee - Memory for AI Agents in 5 lines of code

Demo · Learn more · Join Discord · Join r/AIMemory · Docs · cognee community repo

Build dynamic memory for Agents and replace RAG using scalable, modular ECL (Extract, Cognify, Load) pipelines.

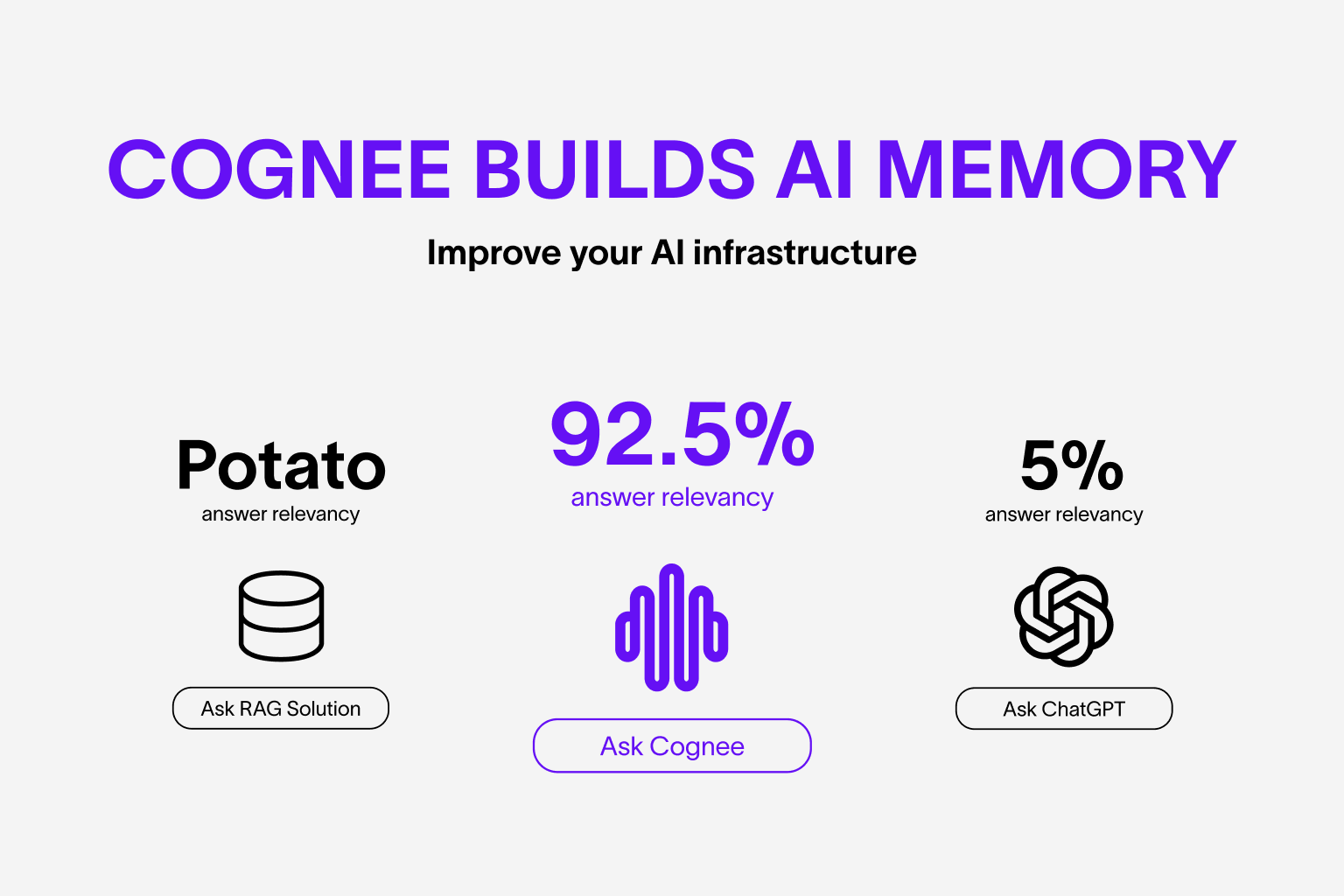

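The ECL (Extract, Cognify, Load) idea can be illustrated with a toy pipeline. This is a hypothetical, self-contained sketch, not cognee's API or implementation. The names `extract`, `cognify`, `load`, and `ecl_pipeline` are invented for illustration, and the "cognify" step uses a naive "X is Y" string rule where a real system would likely rely on a language model to derive entities and relations.

```python
from collections import defaultdict

# Hypothetical toy ECL stages: illustrative only, NOT cognee's API.


def extract(raw: str) -> list[str]:
    """Extract: split raw text into non-empty sentence chunks."""
    return [s.strip() for s in raw.split(".") if s.strip()]


def cognify(chunks: list[str]) -> list[tuple[str, str, str]]:
    """Cognify (toy rule): turn 'X is Y' sentences into (subject, relation, object) triples."""
    triples = []
    for chunk in chunks:
        if " is " in chunk:
            subject, obj = chunk.split(" is ", 1)
            triples.append((subject.strip(), "is", obj.strip()))
    return triples


def load(triples, graph=None):
    """Load: add triples to an in-memory adjacency map (subject -> [(relation, object)])."""
    graph = graph if graph is not None else defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph


def ecl_pipeline(raw: str):
    """Run the three stages in order: extract -> cognify -> load."""
    return load(cognify(extract(raw)))


memory = ecl_pipeline("Alice is an engineer. Bob is a designer. Alice knows Bob.")
print(dict(memory))
```

Keeping each stage a separate function mirrors the "modular" framing: any one stage (for example, a smarter `cognify`) can be swapped without touching the others. Sentences that the toy rule cannot parse, such as "Alice knows Bob", are simply skipped.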

🌐 Available languages: Deutsch | Español | Français | 日本語 | 한국어 | Português | Русский | 中文