Open-source framework for creating self-improving deterministic outputs for LLMs.

Try it in a Google Colab notebook or have a look at our [documentation](https://topoteretes.github.io/cognee).

If you have questions, join our Discord community.

## 📦 Installation

### With pip

```bash
pip install cognee
```

### With poetry

```bash
poetry add cognee
```

## 💻 Usage

### Setup

```
import os

os.environ["LLM_API_KEY"] = "YOUR_OPENAI_API_KEY"
```

or

```
import cognee

cognee.config.llm_api_key = "YOUR_OPENAI_API_KEY"
```

If you are using NetworkX, create an account on Graphistry to visualize results:

```
cognee.config.set_graphistry_username = "YOUR_USERNAME"
cognee.config.set_graphistry_password = "YOUR_PASSWORD"
```

To run the UI, run:

```
docker-compose up cognee
```

Then navigate to localhost:3000/wizard.

You can also use Ollama or Anyscale as your LLM provider. For more info on local models, check our [docs](https://topoteretes.github.io/cognee).

### Run

```
# Top-level await works in a notebook; in a script, wrap these calls in an async function.
import cognee

text = """Natural language processing (NLP) is an interdisciplinary subfield of computer science and information retrieval"""

await cognee.add([text], "example_dataset")  # Add a new piece of information

await cognee.cognify()  # Use LLMs and cognee to create knowledge

search_results = await cognee.search("SIMILARITY", {'query': 'Tell me about NLP'})  # Query cognee for the knowledge

print(search_results)
```

Add alternative data types:

```
await cognee.add("file://{absolute_path_to_file}", dataset_name)
```

Or

```
await cognee.add("data://{absolute_path_to_directory}", dataset_name)

# This is useful if you have a directory with files organized in subdirectories.
# You can target which directory to add by providing dataset_name.
# Example:
#            root
#           /    \
#      reports    bills
#     /       \
#   2024      2023
#
# await cognee.add("data://{absolute_path_to_root}", "reports.2024")
# This will add just directory 2024 under reports.
```

Read more [here](docs/index.md#run).

## Vector retrieval, Graphs and LLMs

Cognee supports a variety of tools and services for different operations:

- **Local Setup**: By default, LanceDB runs locally with NetworkX and OpenAI.
- **Vector Stores**: In addition to the default LanceDB, Cognee supports Qdrant and Weaviate for vector storage.
- **Language Models (LLMs)**: You can use either Anyscale or Ollama as your LLM provider.
- **Graph Stores**: In addition to the default NetworkX, Neo4j is also supported for graph storage.

## Demo

Check out our demo notebook [here](https://github.com/topoteretes/cognee/blob/main/notebooks/cognee%20-%20Get%20Started.ipynb)

[Learn about cognee](https://www.youtube.com/watch?v=BDFt4xVPmro "Learn about cognee: 55")

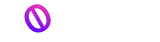

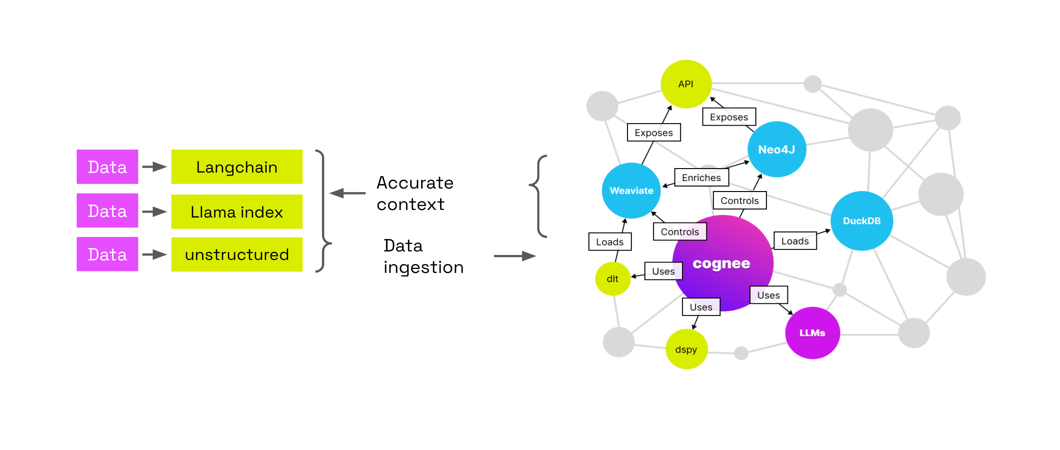

## How it works
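
From the caller's side, the workflow shown under Usage comes down to three awaited steps: add data to a dataset, cognify it, then search. The sketch below wraps those steps in one coroutine so they can run from a plain script rather than a notebook. `run_pipeline` and `main` are hypothetical names introduced here for illustration, not part of cognee. The only cognee calls used are the ones that appear in the Run example, and `main` assumes cognee is installed and `LLM_API_KEY` is set.

```python
import asyncio


async def run_pipeline(client, text, dataset_name, query):
    """Add text, cognify it, then run a similarity search.

    `client` is any object exposing the async add/cognify/search calls
    shown in the Run example (for instance, the imported cognee module).
    """
    # 1. Ingest the raw text into the named dataset.
    await client.add([text], dataset_name)
    # 2. Let cognee and the configured LLM build knowledge from it.
    await client.cognify()
    # 3. Query the resulting knowledge and hand back the results.
    return await client.search("SIMILARITY", {"query": query})


def main():
    # Assumption: cognee is installed and LLM_API_KEY is configured (see Setup).
    import cognee

    text = (
        "Natural language processing (NLP) is an interdisciplinary "
        "subfield of computer science and information retrieval"
    )
    results = asyncio.run(
        run_pipeline(cognee, text, "example_dataset", "Tell me about NLP")
    )
    print(results)
```

Call `main()` to run the flow against a real cognee installation. Passing the client in as a parameter keeps the three-step order easy to exercise with a stand-in object when no API key is available.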

## Star History

[Star History Chart](https://star-history.com/#topoteretes/cognee&Date)
